live streaming to 5,000 concurrent viewers

and how i found out about mutexes and file descriptor limits

Recently, some friends and I had to set up a system for relaying a live stream to quite a few viewers, but we couldn't rely on YouTube, Twitch, or any of the other big bois. And so, we set up our own webpage to stream the content.

Before I go into the details, allow me to explain how live streams are set up.

how live streams are usually done

There are actually quite a few ways of doing live streams: HLS, DASH, WebRTC, and probably a few others I don't know about. But the most common one is HLS, because that's what Apple uses and because they refuse to implement DASH.

Both YouTube and Twitch use HLS. What happens behind the scenes is that your browser gets a playlist URL, like /stream/index.m3u8, whose contents usually look something like this:

    #EXTM3U
    #EXT-X-VERSION:3
    #EXT-X-TARGETDURATION:2
    #EXT-X-MEDIA-SEQUENCE:1
    #EXTINF:2.000,live
    segment001.ts
    #EXTINF:2.000,live
    segment002.ts
    #EXTINF:2.000,live
    segment003.ts

It's a list of .ts files, which each contain a small segment of video and audio, usually a few seconds in length. Every once in a while, the browser will re-fetch the index.m3u8 file and there'll be a new list of .ts files, and the video player will just transparently combine the segments into one continuous video stream.
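
To make the re-fetch loop a bit more concrete, here's a rough Go sketch of what a player does with each new copy of the playlist: pull out the segment names and work out which ones it hasn't downloaded yet. The function names are made up for illustration, and tracking segments by name is a simplification (real players lean on the #EXT-X-MEDIA-SEQUENCE number instead).

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// segmentURIs returns the segment URIs listed in an HLS media playlist.
// Lines starting with '#' are tags or comments; any other non-empty line
// is a URI.
func segmentURIs(playlist string) []string {
	var uris []string
	sc := bufio.NewScanner(strings.NewReader(playlist))
	for sc.Scan() {
		line := strings.TrimSpace(sc.Text())
		if line == "" || strings.HasPrefix(line, "#") {
			continue
		}
		uris = append(uris, line)
	}
	return uris
}

// newSegments returns the URIs in playlist that are not yet in seen, and
// records them in seen. These are the segments a player still has to fetch.
func newSegments(seen map[string]bool, playlist string) []string {
	var fresh []string
	for _, uri := range segmentURIs(playlist) {
		if !seen[uri] {
			seen[uri] = true
			fresh = append(fresh, uri)
		}
	}
	return fresh
}

func main() {
	// Two consecutive versions of the playlist, one segment apart.
	first := "#EXTM3U\n#EXT-X-MEDIA-SEQUENCE:1\n" +
		"#EXTINF:2.000,live\nsegment001.ts\n" +
		"#EXTINF:2.000,live\nsegment002.ts\n"
	second := "#EXTM3U\n#EXT-X-MEDIA-SEQUENCE:2\n" +
		"#EXTINF:2.000,live\nsegment002.ts\n" +
		"#EXTINF:2.000,live\nsegment003.ts\n"

	seen := map[string]bool{}
	fmt.Println("first fetch: ", newSegments(seen, first))
	fmt.Println("second fetch:", newSegments(seen, second))
}
```

On the second fetch only the segment that wasn't in the earlier playlist comes back, which is the one the player would download next.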

So each viewer in the stream will continuously make requests to the webserver for the updated m3u8 file and the segment files as long as the stream is going. If you're aiming for lower latency, the segments will be shorter in duration and the viewer will make more requests to compensate for that.

Now imagine that, but with a lot of viewers. You're basically intentionally DDoS-ing yourself.
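
To put a rough number on that, here's a back-of-the-envelope estimate in Go. It assumes each viewer fetches the playlist once and one segment once per segment duration, which is a simplification (players differ in how often they poll), so treat the output as an order of magnitude and not a measurement.

```go
package main

import "fmt"

// requestsPerSecond estimates the total request rate across all viewers.
// Assumption: per segment duration, each viewer makes one playlist request
// and one segment request.
func requestsPerSecond(viewers int, segmentSeconds float64) float64 {
	const requestsPerSegment = 2.0
	return float64(viewers) * requestsPerSegment / segmentSeconds
}

func main() {
	// 5,000 viewers is the figure from the title; the segment lengths are
	// just examples.
	for _, seg := range []float64{6, 2, 1} {
		fmt.Printf("%.0fs segments: ~%.0f requests/sec\n", seg, requestsPerSecond(5000, seg))
	}
}
```

Halving the segment length doubles the request rate, which is the latency trade-off described above.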

scaling it up

Running the whole thing on a single server would probably work, but we wanted geographically distributed mirrors for the stream, so viewers in different regions can get a copy of the stream from a server that's closer to them, for lower latency.

There are a few fun ways to do that. The most fun one I can think of is anycast, which is when multiple hosts share the same IP address, so the load balancing is done by the network. I really wanna play around with it, but you need your own ASN for that, and the process seems really tedious and expensive. On top of that, you'd also need to find a cloud provider that lets you announce routes over BGP, and the choices for that are pretty limited.

The more realistic and practical option would be to just provide a list of mirrors to the client, and let the client pick the one with the lowest ping. And so that's what I went with.
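
The real client here is a web page, so the actual selection logic would live in the browser. Purely as an illustration of the idea, here's a Go sketch that times one request to each mirror and keeps the fastest one that answered. The mirror URLs and the /ping path are hypothetical, and a single request per mirror is a crude measurement (the first request also pays for DNS and TLS setup).

```go
package main

import (
	"fmt"
	"net/http"
	"time"
)

// probe is the outcome of timing one request to one mirror.
type probe struct {
	URL     string
	Latency time.Duration
	Err     error
}

// measure times a single GET request to url.
func measure(client *http.Client, url string) probe {
	start := time.Now()
	resp, err := client.Get(url)
	if err != nil {
		return probe{URL: url, Err: err}
	}
	resp.Body.Close()
	return probe{URL: url, Latency: time.Since(start)}
}

// fastest returns the URL of the successful probe with the lowest latency.
// The bool is false when every probe failed.
func fastest(probes []probe) (string, bool) {
	var best probe
	found := false
	for _, p := range probes {
		if p.Err != nil {
			continue
		}
		if !found || p.Latency < best.Latency {
			best, found = p, true
		}
	}
	return best.URL, found
}

func main() {
	// Hypothetical mirror URLs; the real list is not given in the post.
	mirrors := []string{
		"https://mirror-a.example/ping",
		"https://mirror-b.example/ping",
	}
	client := &http.Client{Timeout: 2 * time.Second}

	var probes []probe
	for _, m := range mirrors {
		probes = append(probes, measure(client, m))
	}
	if url, ok := fastest(probes); ok {
		fmt.Println("using", url)
	} else {
		fmt.Println("no mirror reachable")
	}
}
```

Skipping failed probes matters as much as picking the minimum: a mirror that is down should never win just because it failed fast.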

setting up the mirrors

What I'm doing for the mirrors is basically tiered caching. There's a single "upstream" server that's running ffmpeg to write the .m3u8 and .ts files to disk, and those files are served over the internet with nginx.

The mirror servers are also running nginx, with proxy_pass to forward the requests to the upstream server, and proxy_cache to cache the files so the requests don't have to go to the upstream every time. Because the .m3u8 file changes often, I set it to be cached for 1 second, and the .ts files to be cached for a bit longer.
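
The actual setup does this in nginx config. To show the idea behind it without guessing at that config, here's a small Go sketch of the same caching rule: pick a lifetime from the file extension, answer from memory while the entry is fresh, and only go to the upstream otherwise. The 1 second playlist lifetime is from above; the 30 second segment lifetime and all the names are assumptions made up for the example.

```go
package main

import (
	"fmt"
	"path"
	"sync"
	"time"
)

const (
	playlistTTL = 1 * time.Second  // from the post: playlists are cached for 1 second
	segmentTTL  = 30 * time.Second // assumption: the post only says "a bit longer"
)

// ttlFor picks a cache lifetime from the file extension.
func ttlFor(urlPath string) time.Duration {
	switch path.Ext(urlPath) {
	case ".m3u8":
		return playlistTTL
	case ".ts":
		return segmentTTL
	}
	return 0 // anything else is not cached
}

type entry struct {
	body    []byte
	expires time.Time
}

// mirrorCache answers from memory while an entry is fresh and otherwise
// asks the upstream through fetch.
type mirrorCache struct {
	mu      sync.Mutex
	entries map[string]entry
	fetch   func(urlPath string) ([]byte, error)
}

func newMirrorCache(fetch func(string) ([]byte, error)) *mirrorCache {
	return &mirrorCache{entries: make(map[string]entry), fetch: fetch}
}

// get returns the cached body for urlPath, refreshing it from the upstream
// when the entry is missing or expired. Holding the lock during the fetch
// keeps the sketch short and means concurrent callers share one upstream
// request, at the cost of serializing everything.
func (c *mirrorCache) get(urlPath string) ([]byte, error) {
	c.mu.Lock()
	defer c.mu.Unlock()
	now := time.Now()
	if e, ok := c.entries[urlPath]; ok && now.Before(e.expires) {
		return e.body, nil
	}
	body, err := c.fetch(urlPath)
	if err != nil {
		return nil, err
	}
	if ttl := ttlFor(urlPath); ttl > 0 {
		c.entries[urlPath] = entry{body: body, expires: now.Add(ttl)}
	}
	return body, nil
}

func main() {
	upstreamHits := 0
	c := newMirrorCache(func(p string) ([]byte, error) {
		upstreamHits++ // stand-in for an HTTP request to the upstream
		return []byte("contents of " + p), nil
	})
	for i := 0; i < 1000; i++ {
		c.get("/stream/index.m3u8")
	}
	fmt.Println("viewer requests: 1000, upstream requests:", upstreamHits)
}
```

Even a 1 second lifetime on the playlist collapses a burst of viewer requests into a trickle of upstream requests, which is the whole point of the mirrors. Expired entries are never evicted here, so this is not something to run as-is.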

Initially, we included the upstream server in the list of mirrors that the clients could connect to, but quickly found out that was a bad idea: the other mirrors sometimes couldn't get the segments on time, which caused the stream to stutter.

In the end, we set up a separate server to be a dedicated upstream, and only exposed the mirror servers to the clients. That improved the stream a whole lot.

counting viewers

I wanted to have a live viewer count too. Initially I thought about using websockets, but that seemed a bit excessive. In the end I went with a basic webserver I wrote in golang. The client makes a request to the server once every 5 seconds, and receives the viewer count in return.

The server, upon receiving the request, inserts the client's IP into a map, along with a timestamp of when they made the request. Once every 5 seconds, a goroutine goes through the map and removes IPs that haven't made a request in the last 10 seconds. Easy!

Well, until it got deployed and I started seeing fatal error: concurrent map writes in the logs and the server crash-looping. Uh-oh. Turns out I was reading and writing to the map of IPs directly from multiple goroutines without using any mutexes, and that's bad!
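
For reference, here's a minimal sketch of what a mutex-guarded version of such a counter could look like. This is not the actual code from the server, just the design described above (IP to timestamp map, prune every 5 seconds, drop anything older than 10 seconds) with a sync.Mutex around every map access. The type and method names are made up.

```go
package main

import (
	"fmt"
	"io"
	"net"
	"net/http"
	"net/http/httptest"
	"sync"
	"time"
)

// viewerCounter tracks when each IP last checked in. The mutex guards
// lastSeen, because net/http runs every request handler in its own
// goroutine and the pruner runs in yet another one.
type viewerCounter struct {
	mu       sync.Mutex
	lastSeen map[string]time.Time
}

func newViewerCounter() *viewerCounter {
	return &viewerCounter{lastSeen: make(map[string]time.Time)}
}

// touch records a check-in from ip and returns the current viewer count.
func (v *viewerCounter) touch(ip string) int {
	v.mu.Lock()
	defer v.mu.Unlock()
	v.lastSeen[ip] = time.Now()
	return len(v.lastSeen)
}

// prune removes IPs whose last check-in is older than maxAge.
func (v *viewerCounter) prune(maxAge time.Duration) {
	v.mu.Lock()
	defer v.mu.Unlock()
	for ip, seen := range v.lastSeen {
		if time.Since(seen) > maxAge {
			delete(v.lastSeen, ip)
		}
	}
}

// pruneEvery calls prune on a fixed interval; run it in its own goroutine.
func (v *viewerCounter) pruneEvery(interval, maxAge time.Duration) {
	ticker := time.NewTicker(interval)
	defer ticker.Stop()
	for range ticker.C {
		v.prune(maxAge)
	}
}

// ServeHTTP registers the caller and replies with the viewer count.
func (v *viewerCounter) ServeHTTP(w http.ResponseWriter, r *http.Request) {
	ip, _, err := net.SplitHostPort(r.RemoteAddr)
	if err != nil {
		ip = r.RemoteAddr
	}
	fmt.Fprintln(w, v.touch(ip))
}

func main() {
	vc := newViewerCounter()
	go vc.pruneEvery(5*time.Second, 10*time.Second) // intervals from the post

	// httptest is used only so this demo starts, answers one request and
	// exits; a real deployment would use http.ListenAndServe instead.
	srv := httptest.NewServer(vc)
	defer srv.Close()

	resp, err := http.Get(srv.URL)
	if err != nil {
		fmt.Println("request failed:", err)
		return
	}
	defer resp.Body.Close()
	body, _ := io.ReadAll(resp.Body)
	fmt.Printf("viewers: %s", body)
}
```

One caveat with this sketch: behind a proxy such as Cloudflare, r.RemoteAddr is the proxy's address, so the client IP would have to come from a forwarded header (Cloudflare sends it as CF-Connecting-IP) or every viewer collapses into a handful of entries.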

Anyway, I fixed it and then the server kept running and counting the thousands of people watching. Until it didn't anymore.

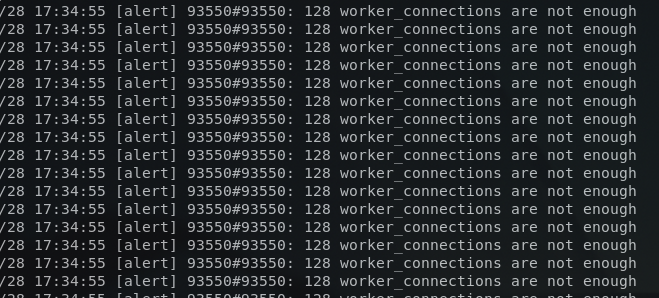

A new problem emerged: nginx couldn't handle the number of connections!

And so I bumped the connection limit up a bit. And I thought that was done. And then I got another error:

    [...] socket() failed (24: Too many open files) while connecting to upstream [...]

Turns out I ran out of file descriptors. So I bumped it up and yay things started running nicely!
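
Every open socket costs a file descriptor, and a proxy needs roughly two per viewer connection: one to the client and one to the upstream. Here's a small Go sketch (Linux/Unix only, since it uses syscall.Getrlimit) that prints the current process limits and compares them against that rough estimate. The two-per-connection figure is an assumption that ignores cache files, logs and so on; in nginx the relevant knobs are usually worker_connections and worker_rlimit_nofile, though which values were used here isn't stated.

```go
package main

import (
	"fmt"
	"syscall"
)

// fdsNeeded is a rough lower bound on descriptors for a proxy: one socket
// to the client plus one to the upstream per connection. This is an
// assumption; cache files and logs need descriptors as well.
func fdsNeeded(connections int) uint64 {
	return uint64(connections) * 2
}

func main() {
	var lim syscall.Rlimit
	if err := syscall.Getrlimit(syscall.RLIMIT_NOFILE, &lim); err != nil {
		fmt.Println("getrlimit failed:", err)
		return
	}
	soft, hard := uint64(lim.Cur), uint64(lim.Max)
	fmt.Printf("open file limit: soft=%d hard=%d\n", soft, hard)

	need := fdsNeeded(5000) // 5,000 viewers, from the title
	if soft < need {
		fmt.Printf("soft limit is below the ~%d descriptors needed\n", need)
	} else {
		fmt.Printf("soft limit covers the ~%d descriptors needed\n", need)
	}
}
```

A common default soft limit on Linux is 1024, which is how a server can look idle on CPU and memory and still fall over at a few hundred proxied viewers.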

here's some fun graphs

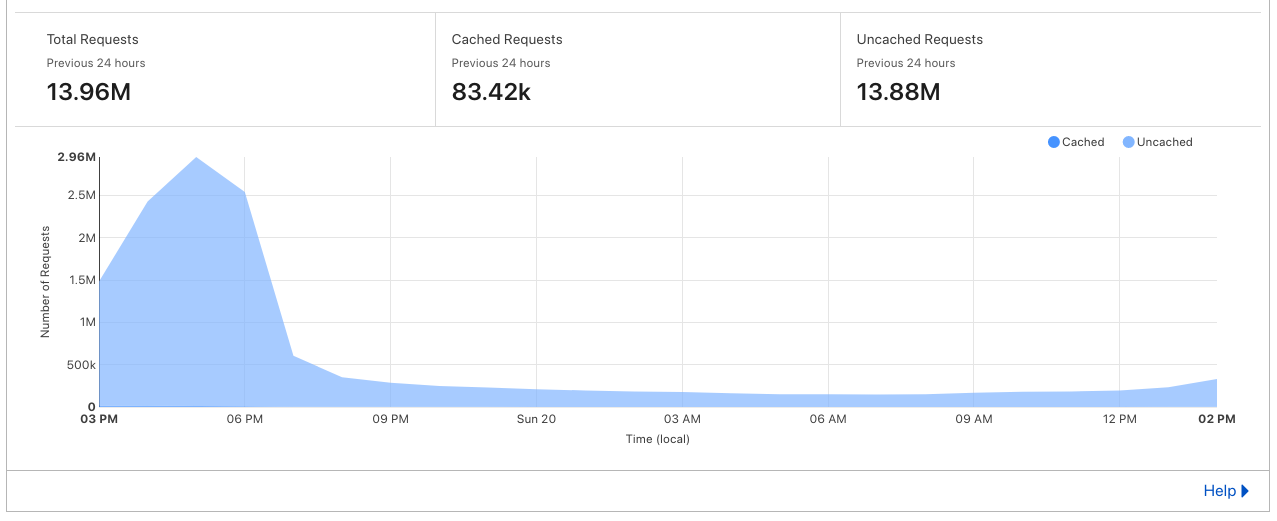

The webserver for counting viewer numbers sat behind Cloudflare. Here's what it looked like:

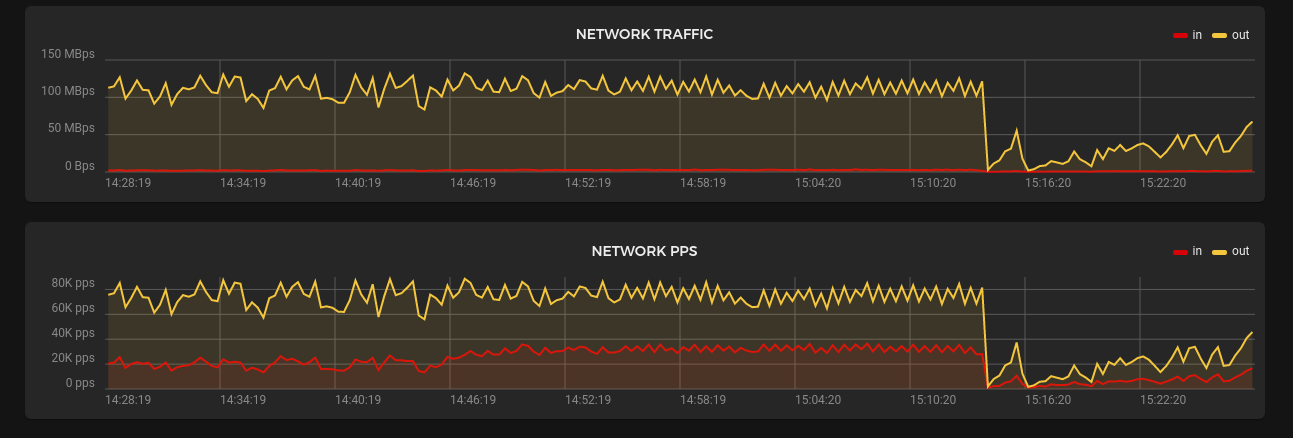

And here's the traffic graph for one of the mirrors:

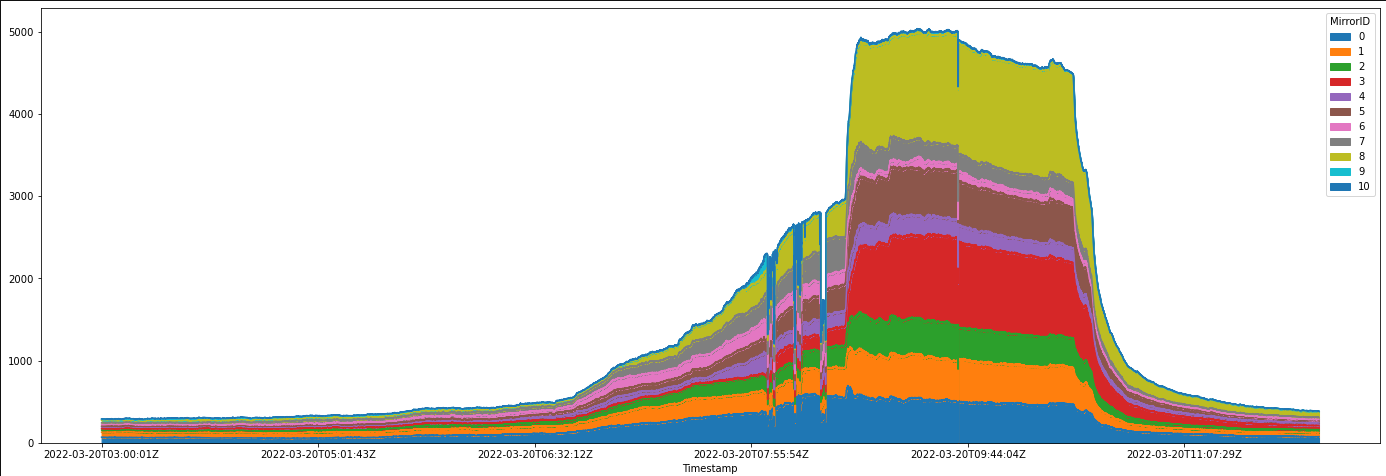

And here's the graph for the viewer count. Those holes in the graph are from when the webserver started dying.

results

It was pretty cool being able to say I ran a server that handled 1k requests/sec once. I compiled the scripts I used and my findings in this git repo, so I can reuse them in the future in case I need to do something like this again.